European Commission Wants Labels on AI-Generated Content -- Now

Large platforms and search engines already face an August deadline to call out manipulated content but are being asked to act even sooner to stop disinformation.

On Monday, the European Commission called on major internet platforms and search engines to take voluntary action on AI-generated content immediately rather than wait for regulatory deadlines.

Industry stakeholders from Telus International and Manta shared their perspectives with InformationWeek separately after the commission made its intentions known.

What the Commission Wants

During Monday’s press conference, Věra Jourová, vice-president in charge of values and transparency with the European Commission, discussed the latest meeting of the taskforce for the European Union’s Code of Practice on Disinformation. She spoke about the implementation of the code, whose signatories now include Facebook, Google, YouTube, TikTok, LinkedIn, and others, and of the Digital Services Act. She also laid out her expectations for those companies, especially major online platforms, including consistent content moderation and investment in fact-checking.

“There’s still far too much dangerous disinformation content circulating,” Jourová said.

The escalation of the EU’s concern was driven by more than the proliferation of generative AI, though the technology may be accelerating sociopolitical concerns. Jourová said it is not business as usual in the world as states such as Russia foster disinformation, including around the ongoing invasion of Ukraine. “The war is not just the weapons but it’s also words,” she said. “The Russian disinformation war against the democratic world already started many years ago after the Crimean annexation.”

Jourová said the EU needs the code’s signatories “to have sufficient capacities in all member states and in all languages” for fact-checking content. She cited the growing capacity of generative AI and advanced chatbots to produce complex content quickly, including images of events that never occurred and imitations of people’s voices. Jourová asked the signatories to create a dedicated track within the Code of Practice on Disinformation to discuss these matters.

She wants safeguards to ensure AI cannot be used by malicious actors to generate disinformation. Jourová also said she wants signatories whose services could disseminate AI-generated disinformation to put technology in place that recognizes and flags such content, clearly labeling it for users.

“We have the main task to protect the freedom of speech, but when it comes to the AI production, I don’t see any right for the machines to have the freedom of speech,” she said.

Some discussions are already underway. Jourová said she spoke with Google CEO Sundar Pichai about developing technology fast enough to detect and label AI-produced content for public awareness. Such technologies already exist, Jourová said, though work on them is ongoing.

There has been some dissent among large platforms. She said Twitter, under Elon Musk’s ownership, chose “the hard way” of confrontation by withdrawing from the Code of Practice on Disinformation. “The code is voluntary, but make no mistake, by leaving the code, Twitter has attracted a lot of attention,” Jourová said. “Its actions and compliance with EU law will be scrutinized vigorously and urgently.”

She also said that if Twitter wants to operate and make money in the European market, it must comply with the Digital Services Act rather than simply adhere to other jurisdictions’ regulations. “European Union is not the place where we want to see an imported, Californian law,” Jourová said.

Although the DSA directs companies designated as very large online platforms and search engines to label manipulated images, audio, and video, possibly by August, Jourová would prefer they not wait. “The labeling should be done now,” she said. “Immediately.”

What Stakeholders Think So Far

The regulatory push might lead to deeper scrutiny of where AI-generated content comes from, down to its data sources. Jan Ulrych, vice president of research and education at Manta, supports the EU’s efforts to regulate this space. Manta provides a data lineage platform that offers visibility into data flows, and the company sees data lineage as a way to fact-check AI content.

Ulrych says that when it comes to news content, there does not yet seem to be an effective method to validate sources, or make them transparent enough, for fact-checking in real time, especially given AI’s ability to generate content rapidly. “AI sped up this process by making it possible for anyone to generate news,” he says.

It is almost a given that generative AI will not disappear because of regulations or public outcry, but Ulrych sees the possibility of self-regulation among vendors along with government guardrails as healthy steps. “I would hope, to a large degree, the vendors themselves would invest into making the data they’re providing more transparent,” he says.

The EU’s request that companies essentially do the right thing and act now was not surprising, says Nicole Gutierrez, associate general counsel at Telus International, an IT services company. Given public sentiment about generative AI, taking action might allay some concerns. “Based on the survey we recently just ran, 71% of American consumers think it’s important for companies to be transparent with how they use generative AI,” she says.

Furthermore, 77% of those surveyed, Gutierrez says, want brands that use generative AI to be required to audit AI algorithms to ensure there is no bias or prejudice.

Adding labels per the EU’s request could build trust with the public, she says, an effort that should be supported by regulators and companies alike.

The EU’s current focus on very large online platforms makes sense, Gutierrez says, because those organizations have the resources to respond sooner than smaller companies. “You don’t want to create a chilling effect across the industry where you’re icing out smaller players because they just don’t have the ability to comply with these regulations,” she says.

That does not mean smaller players will escape future scrutiny and enforcement of requirements to label AI-generated content that appears on their platforms. Tiered fines, Gutierrez says, might come into play for regulating smaller operators.

Multinational companies face a fragmented legal landscape ahead when it comes to AI policy, she says. Jurisdictions such as California continue to develop their own policies in this space while the EU further advances its regulatory stance, and those policies might not coincide.

“If the EU is coming out with regulations and requirements that are conflicting with other requirements of other jurisdictions, especially North America … what they may do in one location is compliant but is not compliant in another,” Gutierrez says.

What to Read Next:

Meta Hit with Record $1.3B GDPR Fine

Meta Preps Possible Halt of EU Services Pending Data Ruling

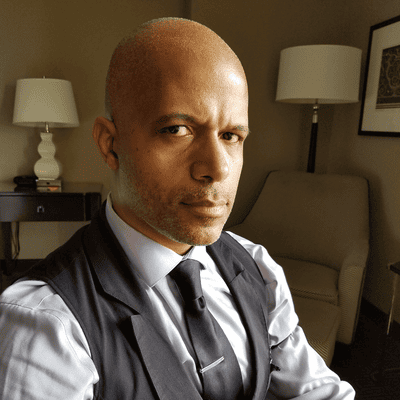

About the Author(s)

You May Also Like

How to Amplify DevOps with DevSecOps

May 22, 2024Generative AI: Use Cases and Risks in 2024

May 29, 2024Smart Service Management

June 4, 2024